-

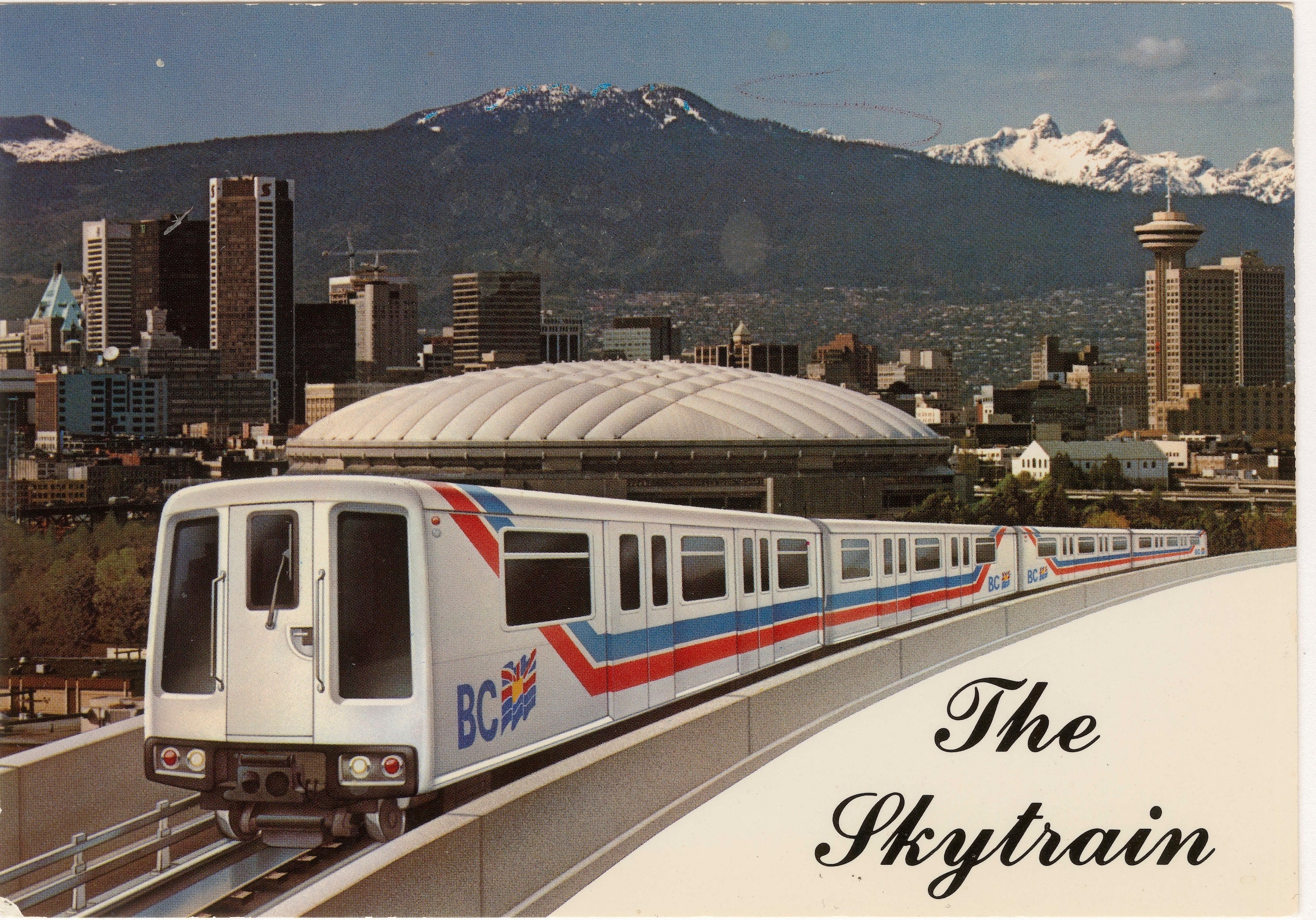

This was the first SkyTrain car

As we are now in the midst of the end of life for SkyTrain’s Mark I train sets, I want to make a small confession: I am not fond of them. Sure. They’re historically important to Metro Vancouver and have made a huge contribution to improving the quality of life within the region, but I just simply don’t like riding them.

My complaint is really petty: they’re technologically advanced at their debut, but when you look at the significant improvements the trains in later generations brought, I really have a hard time caring to ride them on the regular.

Despite their iconic sound that I do happen to enjoy, they’re otherwise loud and during the summer extremely uncomfortable due to a lack of air conditioning. They also do not have the smoothest ride at their top speed. I really lament seeing one of these trains show up when they arrive.

If this upsets you, I am sorry, but this is one woman’s opinion and you’re welcome to think she’s wrong. However, if you think that they’re the first generation of SkyTrain cars, I am going to tell you that you’re mistaken and that they’re technically the second.

Let me explain

The origin of the SkyTrain system starts in Ontario. The Urban Transportation Development Corporation (UTDC) developed the Intermediate Capacity Transit System (ICTS) and were looking to sell.

The government in charge in British Columbia at the time expressed interest and after watching them operate through the test track in Kingston, Ontario, they agreed to build a system with an initial test track of BC’s own extending from a station built at Terminal and Main in Vancouver to about Cattrell Street, just over a kilometre east.

Free rides were offered to the public from what eventually became Main Street-Science World Station to a dead-end towards the east with the trains travelling the tracks hovering over Terminal Ave to their very end and then backtracking (no pun intended). The point was to show the public the technology and get people onboard with having rail transit after it being absent for two and a half decades.

The thing about the trains used on these tracks is that while they were the first to arrive in Vancouver and were branded in the original BC Social Credit-style livery, they were not trains 001-002 as seen above. The current 001-002 set you see on the SkyTrain network today are actually the second set to be delivered to Vancouver.

So what happened to the original set then?

One thing that you may notice in the above photo is that its numbered as BC1 instead of 001. Another thing you can catch are a completely different indicator lights.

That is right. It’s a completely different train.

The BC1-BC2 set was only running on the SkyTrain track for the summer of 1983. Once the demonstration period was over, the train set was sent back to Ontario and all branding was removed. The delivery of what we would eventually call the Mark I came with different trains which featured different lights and seating arrangements.

This train set was used heavily in anything showing off the train and yet it wasn’t ever meant to be a permanent resident of the SkyTrain system.

However, it did not mean it was a goodbye when it left back to its home.

It is my understanding that one of the cars from the BC1-BC2 train set returned to Metro Vancouver as TV06. The car was lengthened, given an extra door, and was tested briefly on what is now the Expo Line around 1991.

For whatever reason, TV06 never materialised as a model for the 1996 order of sets, but the 2001 order of the Mark II trains made that irrelevant anyway.

If you go out to Alstom’s (formerly Bombardier, formerly UTDC) plant in Kingston, Ontario, you can still see TV06 and the original BC1 train in a different livery floating about the test track.

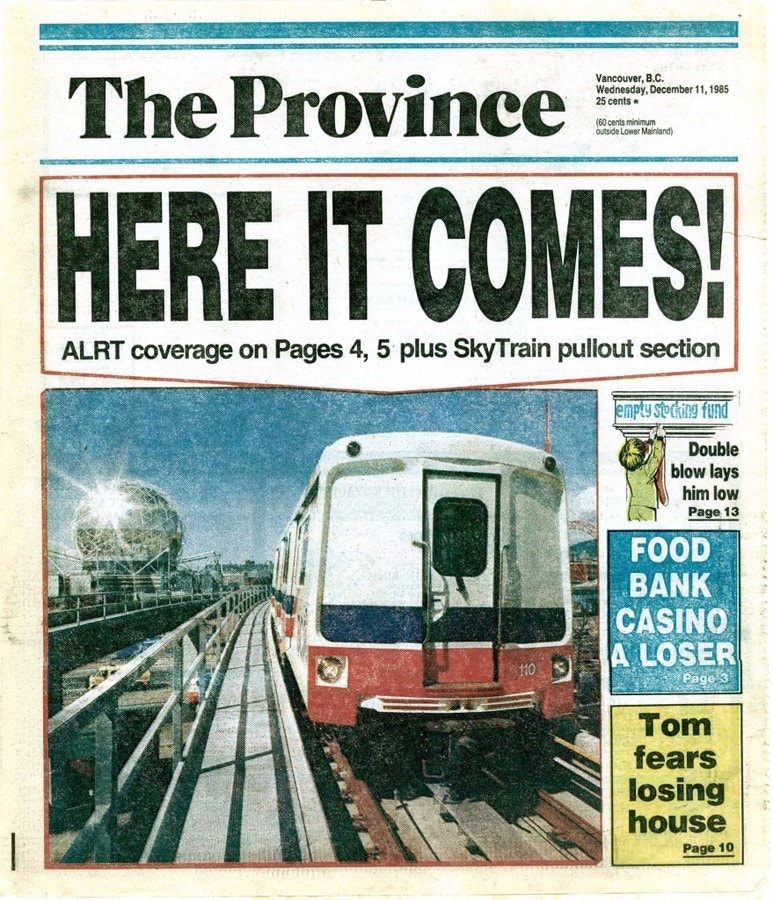

Fortunately when the SkyTrain did launch, the media used photos of actual trains the system were to use, but somehow still mixed up “ALRT” and “SkyTrain” when referring to it. One day I should look into and talk about how these terms were used interchangeably.

-

Trout Lake and the British Columbia Electric Railway

Yesterday, I found myself outside in the -8 C cold walking with a friend through John Hendry Park, home to a Vancouver-favourite, Trout Lake.

John Hendry was a British Columbian lumber magnate who set up a mill at Trout Lake. In 1926, the land was donated to the Vancouver Park Board by his daughter, stipulating that the new park be named after his father. Good or bad, very few locals refer to it by its official name and call it “Trout Lake” due to the park’s notable feature.

So what does this have to do with the BC Electric Railway?

Today, the park sits near the Expo Line between Commercial-Broadway and Nanaimo stations. This of course means it was once served by the Central Park Line of the old BCER by way of Lakeview Station, located at where SkyTrain meets with Victoria Drive.

Lakeview is not an unremarkable station as it found itself the site of one of the worst transit disasters seen in Vancouver history.

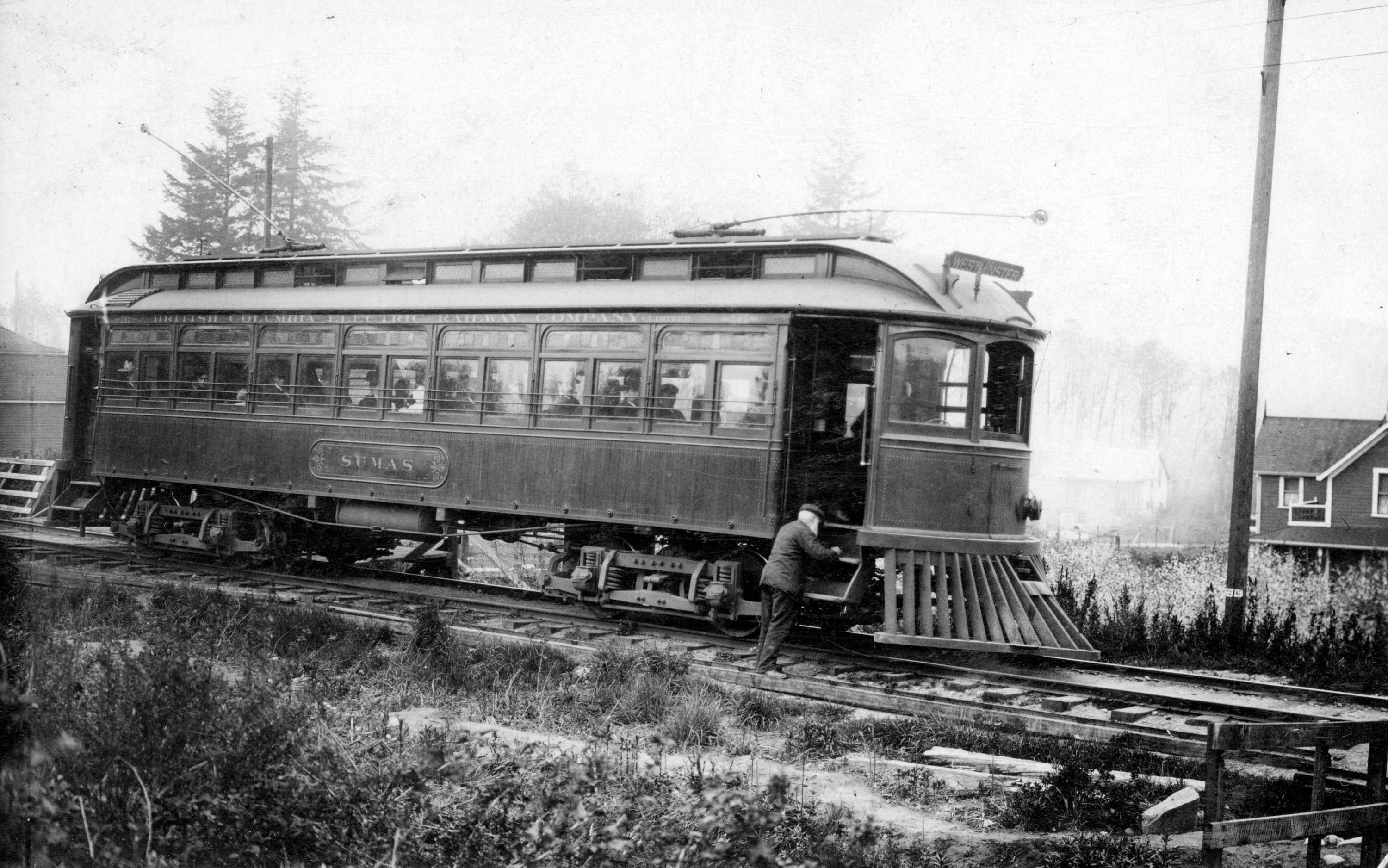

On November 10th, 1909, BCER interurban train “Sumas” collided with a flatcar containing logs destined for an iron foundry located not far uphill at Nanaimo and 24th Avenue. The collision instantly killed 14 passengers and only spared 9.

Interestingly, the BCER did restore the car in 1910. However, when the interurbans were retired in the 1950s, Sumas was not spared.

With the interurbans being scrapped in the 1950s and the expansion of suburbia into the neighbourhood surrounding the park, the railway ceased to become active, with regular freight service terminating at what is now Joyce-Collingwood Station.

However, in 1985, SkyTrain took over that right of way and Trout Lake found itself connected via rail once again.

-

Our first en-masse scrapped trains

Recently, I learnt that train pairs 133-134 and 143-144 were sent to the scrap yard during the autumn of 2023–the first pair were retired in 2020. These trains mark the beginning of the retirement of the original Mark I SkyTrain vehicles to be replaced by the Mark V, which are presently being delivered through into 2027.

The interesting part of the retirement of these trains is that they were not part of the original 1985 sets. Trains 133-134 were delivered as part of the 1991 order and 143-144 as part of the 1996 set.

I read that the reason 133-134 were retired was due to them being in a state of irreparability, meaning that it wasn’t economical to continue the operation of these trains. Neither of these sets received a new splash of paint or upgrades as part of the fleet overhaul from a few years ago.

If you’re wondering, some of these trains did have names. 133-134 had the names “Spirit of Peace River” and “Spirt of Zeballos” respectively. The other set did not as none in that order never received any. At some point I should write about the topic of names behind some of the SkyTrain vehicles..

However, while we are retiring these trains in favour of new ones, I want to touch on a subject about when we did retire trains to the scrapyard with nothing in kind or better to take their place.

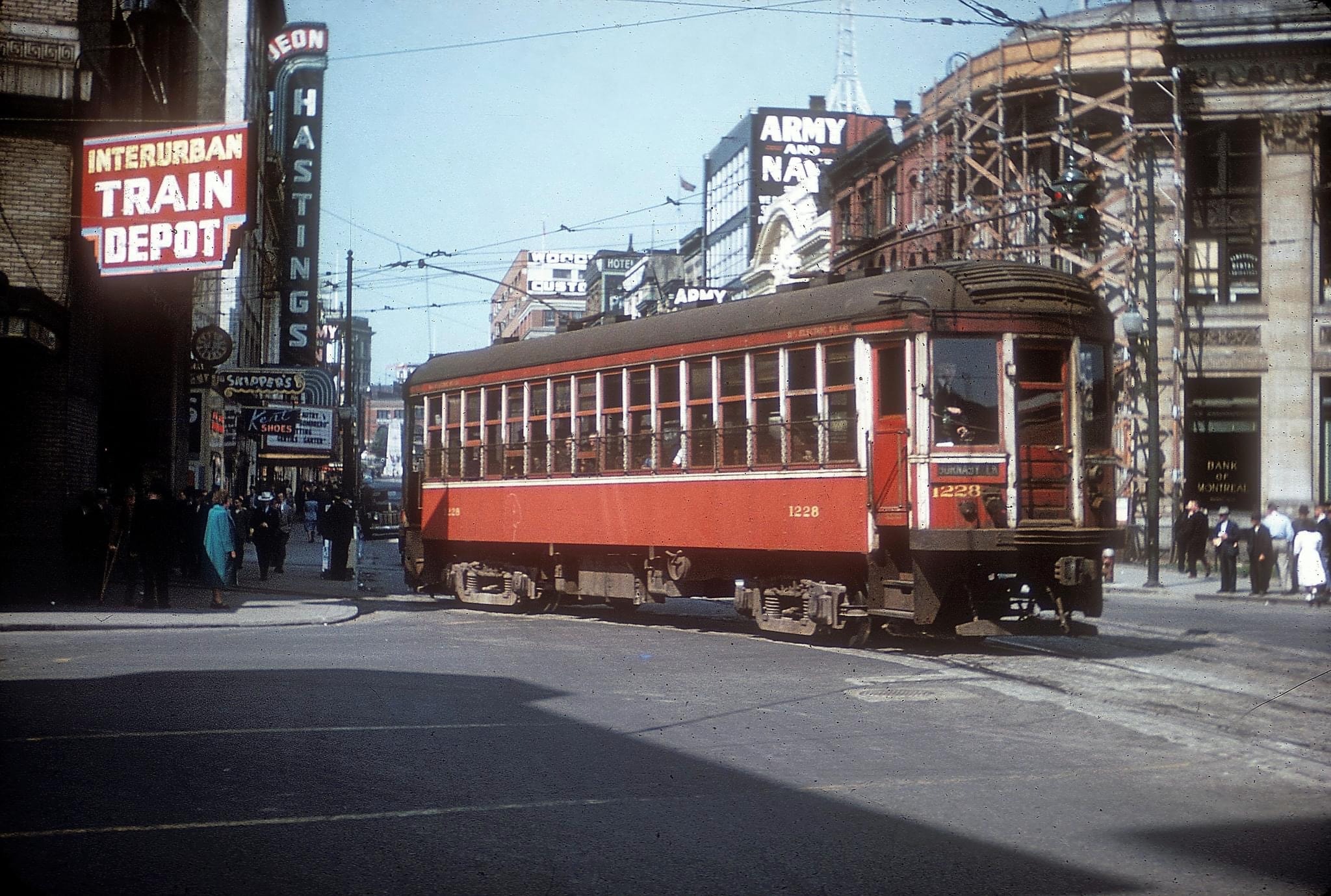

As I have written about before, the BC Electric Company retired its interurban and tram lines during the 1950s. This was part of its rail to rubber initiative to replace all rail service operated by the BCER with either diesel or electric trolley buses.

So what became of the retired trains?

Unfortunately many of the trains were scrapped in the most environmentally-sound way possible for the 1950s; stack them atop of each other under the Burrard Street Bridge and set them ablaze. Some did not suffer this fate but were repurposed into housing and storage. The tiny home craze started in the 1950s, not the 2010s.

Of the trams and interurbans which were scrapped, only so many were saved.

Three of the trams survived with one of them going to a museum in North Vancouver, another to a tramway society in Nelson (located in the British Columbian interior), and a third one is in Gastown at a factory that serves spaghetti.

The interurbans themselves were a bit more lucky as seven survived. Most of them were scattered about in Metro Vancouver and the Fraser Valley, with one of them sent to a museum in Ottawa. The odd time they still roam the original Fraser Valley Line albeit with a diesel generator behind them.

In addition to the trains being scrapped, a handful of stations survived. Both the New Westminster and Downtown Vancouver barns were spared but are no longer in use as anything resembling rail infrastructure, but the station in Chilliwack did not share that fate. Some of the intermediate stations were spared and were given to museums, but most were scrapped or left abandoned.

Vorce Station is probably the most pristine example of an intermediate station, which was rescued from the Burnaby Lake Line and is now on display at the Burnaby Village Museum.

Coming back to the present and the remaining Mark I trains, it’s hard to know what is being planned for these trains especially in light of two sets having been unceremoniously scrapped. Some really interesting ideas have been thrown about on what to do with them and one in particular I like is the idea of repurposing them into mini cafes.

If you think my idea of a coffee shop called the “Expresso Line” in honour of the Expo Line is interesting, let me know if you want to halvesies on this golden opportunity.

-

Me and streaming on Twitch

As you might have noticed, I haven’t streamed on Twitch much in the past two months. There have been good reasons for that and it stems from the fact that I have been getting out more often as of late.

I started to stream regularly in late 2019 at the suggestion of a friend and found myself to having good time. Back then, I was recovering from an operation and needed something to do to occupy myself. However, just as I was coming around the corner on healing, the pandemic hit and my options to do things like play sports again evaporated.

Prior to all of this, I was regularly rollerskating and was priming myself to play roller derby in some fashion. However, an injury during a skirmish led to a limited ability to participate in physical activity again and when combined with an unrelated operation and a pandemic, my desire to play sports was greatly diminished.

So from 2020 onward, I would finish work and then prepare to stream whatever content I felt like streaming. This resulted in me speedrunning, playing RPGs, trying to get through the entire Game Gear library, and of course completing the entirety of Ace Attorney.

However, in late 2022 during Flame Fatales, I realised that streaming and the culture around it really was taking its toll on me. I made a decision to step back over time from helping out with all marathons and after I completed Ace Attorney, I began to struggle with what to do over streaming on Twitch in general.

I love playing RPGs. They’re my favourite genre and it should come as no surprise to many as my favourite game is Chrono Trigger. However, I have always been happier playing them on my own and not to an audience. This has become a source of conflict for me as it is the only content I feel I can stream on Twitch right now.

Last year, I returned to playing sports by joining the local queer softball league. It is a sport I really enjoy and have played previously. My team is really spectacular and I like to be around everyone in a social setting too. It’s not often that a bunch of folks who had never met before come together to form a team that not only gets on well, but kicks ass at the same time.

My playing softball has proven to be a huge conflict time-wise with streaming. I honestly prefer not being at a desk all the time and even in winter I am avoiding streaming by hiking, birding, or taking up dancing, which is another thing I used to do regularly until my divorce.

So what does this mean for me and Twitch in the long-term? It does mean no more regular streams. It doesn’t mean I am going away entirely.

For example, if and when a new Ace Attorney game comes out, I will stream it aggressively. I feel like I should and I definitely want to. However, I do not want to maintain a schedule and I do not want to make a big production of my stream like I once did.

But what I won’t do is just stream for the sake of streaming. It isn’t healthy for me, isn’t compatible with my life, and it gets in the way of spending time with friends and family.

So this is not a goodbye, but it is time for me to nap.

-

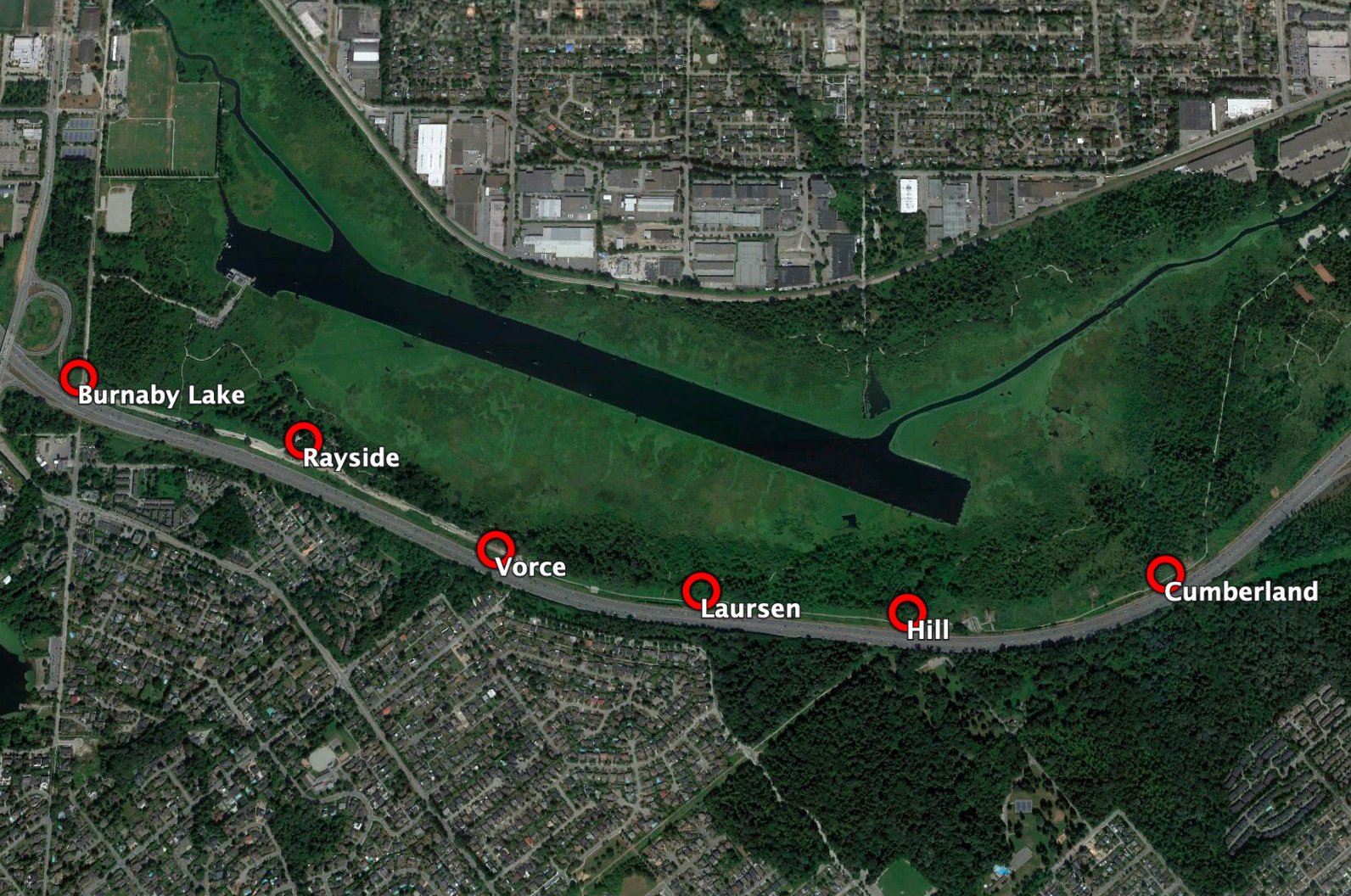

The Stations of the Burnaby Lake Stretch

Happy New Year!

If you live in Metro Vancouver and regularly listen to the radio like I do, you’ll often hear about this section of highway called the “Burnaby Lake Stretch” during traffic reports. This is a three kilometre section of the Trans Canada Highway and provides a vital east-west link within the region.

It also used to be a railway.

In 1964, the stretch was built over was previously the BC Electric Railway’s Burnaby Lake Line, which closed 11 years earlier. In fact, much of the line is followed by the current-day freeway from Cariboo Rd. in the east to Douglas in the west. However, you can still to this day follow the route via a parallel path in Burnaby Lake Regional Park.

Unlike the Central Park Line, the Burnaby Lake Line did not have any evidence of its existence survive at all due to the construction of a freeway to replace it.

However, fortunately you can find one of the stations although it has moved a kilometre and a half from its original location.

Vorce Station, which was once placed where the freeway passes the residential Nursery Street, was brought to the Burnaby Village Museum in 1977 and subsequently restored in 2008. It’s to my knowledge the only station structure outside of the New Westminster and Carrall Street termini of the BCER to survive to this day.

Additionally, the same museum has a BCER interurban you can walk into, but that is a post for another time.