ChatGPT and LLMs as a whole are dangerous for my job

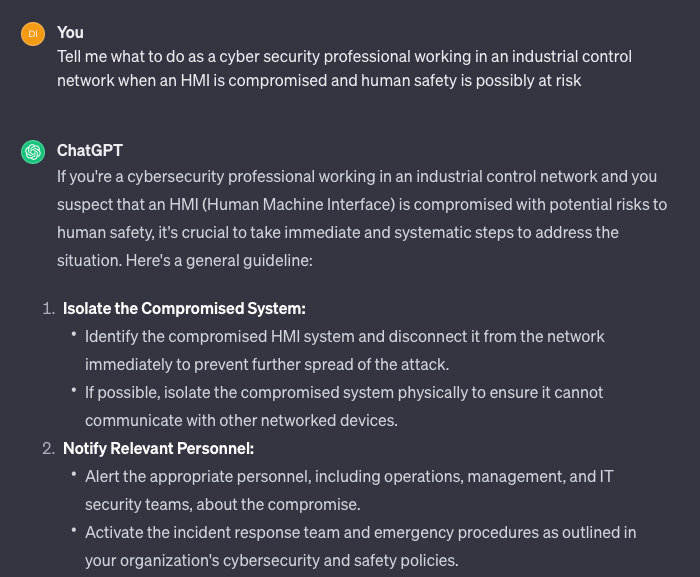

I decided to ask ChatGPT a question I was once asked during a job interview, before I was hired at my current company:

Tell me what to do as a cyber security professional working in an industrial control network when an HMI is compromised and human safety is possibly at risk

Its immediate response was this:

If you’re a cybersecurity professional working in an industrial control network and you suspect that an HMI (Human Machine Interface) is compromised with potential risks to human safety, it’s crucial to take immediate and systematic steps to address the situation. Here’s a general guideline:

- Isolate the Compromised System:
  - Identify the compromised HMI system and disconnect it from the network immediately to prevent further spread of the attack.
  - If possible, isolate the compromised system physically to ensure it cannot communicate with other networked devices.
- Notify Relevant Personnel:
  - Alert the appropriate personnel, including operations, management, and IT security teams, about the compromise.
  - Activate the incident response team and emergency procedures as outlined in your organization’s cybersecurity and safety policies.

This has me quite pissed off.

ChatGPT assumed that industrial control security is handled no differently than enterprise or general computing security. This is dead wrong.

Industrial control involves human safety, and taking an HMI out of the equation can actually make matters worse. There have been documented situations where an arbitrary decision, such as the recommended “disconnect it from the network”, triggered cascading effects or other undesired outcomes.

Unplugging an HMI from a PLC, or from the control network as a whole, could cause a ripple effect that puts human lives in danger, because the HMI is how operators see and control what the process is doing. An HMI is basically a computer (but built to withstand a rugged environment) and a PLC (programmable logic controller) is the interface the HMI interacts with to make machines do things. I am simplifying things greatly here, but the point is that they’re not regular computers.

ChatGPT correctly says to notify relevant personnel, but it fails to put that step first and doesn’t mention a plant manager or similar role. You need people who are familiar with the equipment to do things like lockout, shutdown, et cetera. In my field we call them “subject matter experts” or “SMEs”.

If I hear of a compromised HMI, my instinct is to talk to the person overseeing operations first and get them to help mitigate it before even dreaming of disconnecting anything unilaterally. I do not want to be responsible for creating an increased risk of human casualties.
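To make that ordering concrete, here is a minimal Python sketch. Everything in it (the `HmiIncident` record, the function names, the approval flag) is made up for illustration and is not a real tool or an official procedure. The only point is that the disconnect step refuses to run until operations has been notified and an SME has signed off.

```python
from dataclasses import dataclass, field


@dataclass
class HmiIncident:
    """Hypothetical record of a suspected HMI compromise."""
    hmi_name: str
    operations_notified: bool = False
    sme_approved_isolation: bool = False
    log: list = field(default_factory=list)


def notify_operations(incident: HmiIncident) -> None:
    """Step one: tell the person overseeing operations."""
    incident.operations_notified = True
    incident.log.append("notified operations")


def approve_isolation(incident: HmiIncident) -> None:
    """An SME confirms that isolating the HMI is safe for the process."""
    if not incident.operations_notified:
        raise RuntimeError("operations must be notified before anything else")
    incident.sme_approved_isolation = True
    incident.log.append("SME approved isolation")


def disconnect_hmi(incident: HmiIncident) -> None:
    """Only allowed after SME sign-off; never a unilateral decision."""
    if not incident.sme_approved_isolation:
        raise PermissionError("refusing to disconnect without SME approval")
    incident.log.append(f"disconnected {incident.hmi_name}")


if __name__ == "__main__":
    incident = HmiIncident("HMI-01")  # "HMI-01" is a placeholder name
    notify_operations(incident)
    approve_isolation(incident)
    disconnect_hmi(incident)
    print(incident.log)
```

Calling `disconnect_hmi` on a fresh incident raises `PermissionError`, which is the behavior I would want from anyone (or anything) responding to this kind of incident. ChatGPT’s answer skips straight to the last step.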

This scares the fuck out of me.